Scientific computing resources for machine protection¶

This documentation describes the provisioning, management, configuration and use of the scientific computing infrastructure for Machine Protection projects and studies, as done in the TE-MPE-CB section. The goal is to enable and support these activities and their scientific computing requirements, such as beam tracking simulations, availability studies, FEM analyses, and machine learning applications.

The service is provided to the members of the machine-protection-studies-users e-group:

Create a request to join the project

The dedicated infrastructure mainly relies on virtualized resources provided by the machine-protection-studies project on the CERN private cloud (Openstack) and is managed using Puppet. The configuration is built from the top-level Puppet / Foreman hostgroup machine_protection using the workflow and toolchains maintained by IT. Additional computing resources are provided on the CERN batch system (HT Condor) to support high-performance and high-throughput computing needs (see the HT Condor documentation page).

This documentation is intended for end-users as well as for system administrators and developers. The typical use cases, from a user's perspective, are documented one by one, including for example Ansys, Python, GPU computing and FLUKA. A section is dedicated to specific projects and activities developed in the machine protection group. It documents specific information on a project-by-project basis, gives an overview of resource management, and acts as a starting point for new users.

First steps¶

New users are encouraged to read through the following sections in order.

Suggested first steps

- Familiarize yourself with the available resources as described in the overview section below

- The getting-started guide will walk you through all the steps to start using the available resources, in particular the Openstack Virtual Machines

- Detailed instructions are available for the main end-user features:

- Read about the concepts and details of your specific project

- Check out the detailed instructions for typical use cases.

System administrators will find detailed information here.

Available resources¶

All the resources are summarized below, including the virtualized computing resources, EOS storage spaces, CVMFS software deployment repositories and the Gitlab central project.

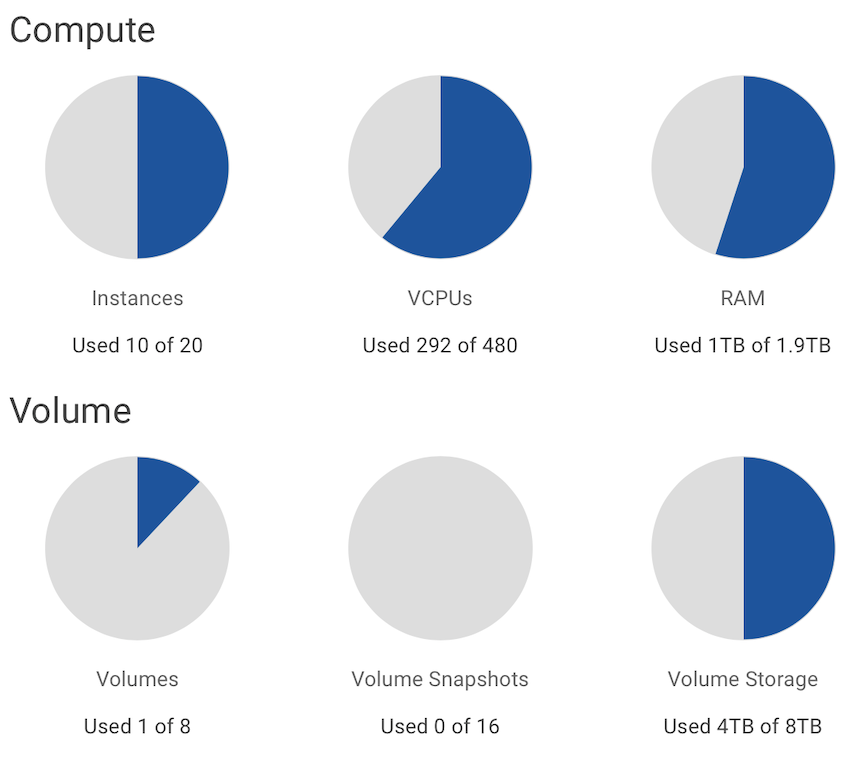

Openstack¶

Openstack Virtual Machines

A complete description of the Openstack Virtual Machines made available to the members of the project is provided in the Openstack resources documentation.

At the moment, the Openstack project provides access to the following resources:

- 480 CPU cores

- 1.9 TB of RAM

- 8 TB of volume storage

- Up to 20 VM instances

- Up to 8 volumes

- Hosts with NVIDIA V100s GPUs (see here)

Virtual machines of the following "flavors" (see footnote 1) can be instantiated using these resources:

    $ openstack flavor list
    +------------+--------+------+-----------+-------+-----------+
    | Name       |    RAM | Disk | Ephemeral | VCPUs | Is Public |
    +------------+--------+------+-----------+-------+-----------+
    | m2.small   |   1875 |   10 |         0 |     1 | True      |
    | m2.medium  |   3750 |   20 |         0 |     2 | True      |
    | m2.large   |   7500 |   40 |         0 |     4 | True      |
    | r2.xlarge  |  30000 |   80 |         0 |     8 | False     |
    | r2.3xlarge | 120000 |  320 |         0 |    32 | False     |
    | g2.xlarge  |  16000 |   64 |       192 |     4 | False     |
    +------------+--------+------+-----------+-------+-----------+
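As a rough illustration of how the project quota constrains the choice of flavor, the following Python sketch estimates how many instances of a single flavor would fit. The figures are copied from the quota list and the flavor table in this section. The helper name `max_instances` is hypothetical, the RAM column is assumed to be in MB, 1.9 TB is taken as 1,900,000 MB, and volume storage is ignored. The GPU flavor is left out because the number of available GPUs is not stated here.

```python
# Project quota as listed in this section.
# Assumption: 1.9 TB of RAM is taken as 1,900,000 MB.
QUOTA = {"vcpus": 480, "ram_mb": 1_900_000, "instances": 20}

# Non-GPU rows of the flavor table: name -> (RAM in MB, VCPUs).
FLAVORS = {
    "m2.small": (1875, 1),
    "m2.medium": (3750, 2),
    "m2.large": (7500, 4),
    "r2.xlarge": (30000, 8),
    "r2.3xlarge": (120000, 32),
}


def max_instances(flavor: str) -> int:
    """Largest number of VMs of one flavor that fits within the quota."""
    ram_mb, vcpus = FLAVORS[flavor]
    return min(
        QUOTA["vcpus"] // vcpus,    # limit imposed by CPU cores
        QUOTA["ram_mb"] // ram_mb,  # limit imposed by RAM
        QUOTA["instances"],         # limit on the number of VM instances
    )


if __name__ == "__main__":
    for name in FLAVORS:
        print(f"{name}: up to {max_instances(name)} instances")
```

Under these assumptions, the limit of 20 instances is the binding constraint for every flavor except r2.3xlarge, which is limited to 15 instances by both the core and the RAM quota.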

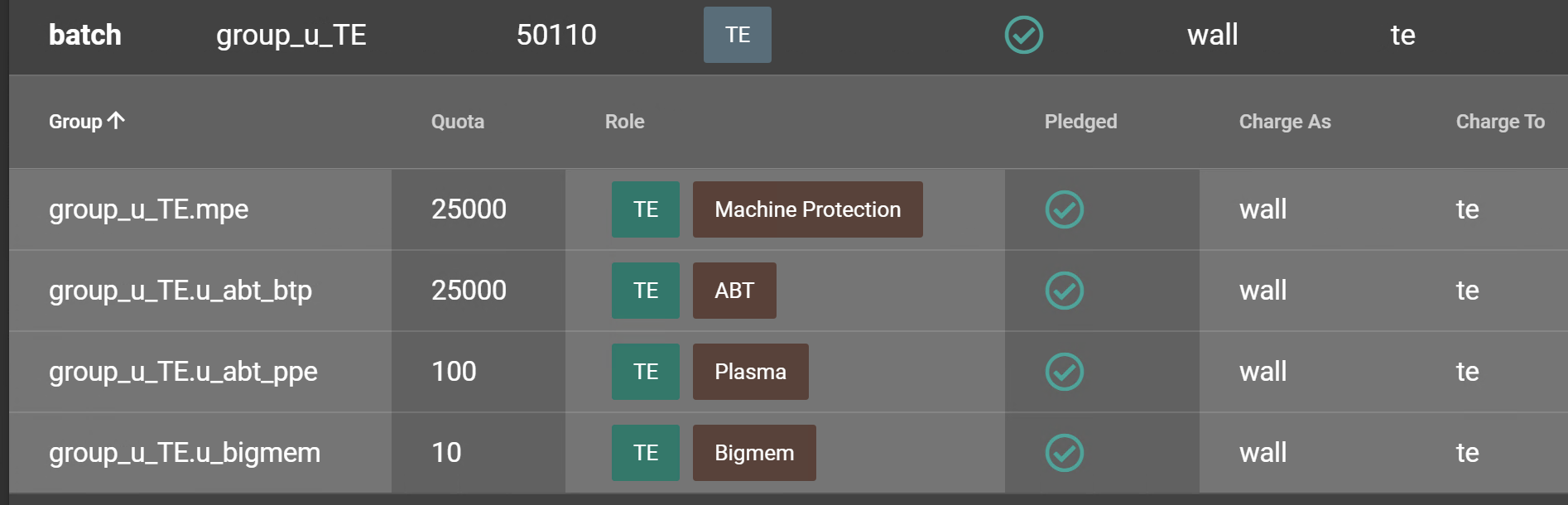

HT Condor¶

In addition, the following HT Condor resources are accessible to members of the machine protection group:

- group_u_TE.mpe: fair-share priority access to the roughly 5k cores available for TE;

- group_u_TE.u_bigmem: big-memory nodes for specific computing applications (e.g. Ansys);

- GPU resources: GPUs are available and can be accessed through HT Condor.
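To illustrate how an accounting group might be selected at submission time, here is a minimal Python sketch that renders an HT Condor submit description as text. The `+AccountingGroup` attribute and the `request_gpus` command are standard submit-file syntax, but the helper `render_submit`, the script name and the output file names are hypothetical. Whether a group name is accepted exactly as written depends on the local configuration, so the HT Condor user documentation remains the reference.

```python
def render_submit(executable: str, accounting_group: str, gpus: int = 0) -> str:
    """Render a minimal HTCondor submit description as a string."""
    lines = [
        f"executable = {executable}",
        "output = job.$(ClusterId).$(ProcId).out",
        "error = job.$(ClusterId).$(ProcId).err",
        "log = job.$(ClusterId).log",
        # Select the accounting group the job is charged to.
        f'+AccountingGroup = "{accounting_group}"',
    ]
    if gpus > 0:
        # Only request GPUs when the job actually needs them.
        lines.append(f"request_gpus = {gpus}")
    lines.append("queue")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical job script; replace with a real executable.
    print(render_submit("run_simulation.sh", "group_u_TE.mpe"))
```

The rendered text can be saved to a file and passed to `condor_submit`.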

HT Condor resources for machine protection

For more details see the user documentation section on HT Condor.

The memberships and available resources of the accounting groups can be viewed on Haggis (more information on Haggis).

Git and gitlab¶

The Gitlab project machine-protection serves as a central point to organize and version libraries, code and documentation content. It is described in more detail here.

The CI/CD capabilities of Gitlab are particularly valuable: they automate tasks and add computational capabilities to otherwise static deployments.

EOS¶

EOS is the disk storage system at CERN for large physics data. It is also used as the storage backend for the CERNBox cloud data storage provided to CERN users.

Massive storage space is available on EOS for the machine protection projects:

- Superconducting magnet damage studies (scmagnetdamagestudies);

- Machine protection failure studies (mp-failure-studies).

The project spaces are hosted on /eos/project-{x}/{name} and can be accessed using an EOS mount or via the CERNBox web interface. On the web interface, the default view is the user's personal CERNBox; project spaces to which the user has access rights appear under Your projects.

More details can be found in the user documentation.

CVMFS¶

CVMFS is a read-only filesystem used to deploy software on distributed computing infrastructure (e.g. lxbatch). Scientific software in use for machine protection studies can be deployed on the top-level repository beam-physics.cern.ch. The repository is accessible at /cvmfs/beam-physics.cern.ch on machines where CVMFS is deployed. Additionally, the repository is mirrored worldwide on the stratum-1 servers.

The following software is available:

- BDSIM: available at /cvmfs/beam-physics.cern.ch/bdsim; refer to the documentation.
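Before relying on software from the repository, a script may want to check that CVMFS is actually reachable on the current machine. The following Python sketch does this for the BDSIM directory mentioned above. The helper `find_software` is hypothetical, and the check only tests that the directory exists; it says nothing about which versions are installed or how to set up the environment.

```python
from pathlib import Path
from typing import Optional

# Repository path as given in this section.
CVMFS_REPO = Path("/cvmfs/beam-physics.cern.ch")


def find_software(name: str, repo: Path = CVMFS_REPO) -> Optional[Path]:
    """Return the software directory inside the repository, or None if absent."""
    candidate = repo / name
    return candidate if candidate.is_dir() else None


if __name__ == "__main__":
    bdsim = find_software("bdsim")
    if bdsim is None:
        print("BDSIM not found: is CVMFS mounted on this machine?")
    else:
        print(f"BDSIM is available at {bdsim}")
```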

Hadoop File System (HDFS)¶

HDFS is a distributed file system that is central to the Hadoop and Spark ecosystems. It is available at CERN, and machine protection projects use it together with Spark. IT kindly provides 300 GB of 3x replicated space on HDFS, which can be read, for example, by a Spark application. To gain access, request membership of the e-group machine-protection-studies-hdfs. The available space is at /project/machine_protection.
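As a sketch of how a Spark application might read from the project space, the Python snippet below builds an HDFS URI under /project/machine_protection and passes it to an existing Spark session. The helper names, the use of the Parquet format and any dataset path are assumptions made for illustration; the Spark session is expected to be created elsewhere, with the cluster's default name node configured.

```python
import posixpath

# Project space on HDFS, as given in this section.
HDFS_PROJECT_ROOT = "/project/machine_protection"


def project_uri(relative_path: str) -> str:
    """Build an HDFS URI under the project space (default name node assumed)."""
    return "hdfs://" + posixpath.join(HDFS_PROJECT_ROOT, relative_path.lstrip("/"))


def read_parquet(spark, relative_path: str):
    """Read a Parquet dataset using an existing SparkSession passed as `spark`."""
    return spark.read.parquet(project_uri(relative_path))
```

With a running session, a call such as `read_parquet(spark, "some/dataset.parquet")` would return a Spark DataFrame; the dataset path shown here is a placeholder.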

1. The flavor name prefixes indicate the following: g for GPU-enabled flavors, r for restricted access.